Object-centric Task Representation and Transfer using Local Reference Frames

Local reference frames from online sensor data

Motivated by IntelliMan’s use cases in daily-life kitchen activities and fresh food handling, recent work at the Idiap Research Institute introduces local reference frames computed from camera or tactile sensor measurements. These frames give robots a consistent, geometry-aware sense of direction on and around curved objects.

Instead of relying on a single fixed robot frame, the approach builds a local reference frame at each point on or near the object. These local frames capture directions such as “along the surface” and “toward or away from the object”. With these frames in place, transferring a learned task from one object to another becomes a matter of replaying the task in the local reference frames of the new object. The structure of the skill stays the same, while the actual motion adapts to the new geometry.
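To make the idea of a per-point frame concrete, here is a minimal sketch. It assumes the object is described by an implicit, distance-like function (negative inside, zero on the surface, positive outside). The ellipsoid, the function names `ellipsoid_level` and `local_frame`, and the `ref_axis` parameter are illustrative assumptions, not the sensor-based construction used in the work itself. The frame's normal axis points "away from the object", and its two tangent axes span the "along the surface" directions.

```python
import numpy as np

def ellipsoid_level(x, radii=np.array([1.0, 1.0, 2.0])):
    """Hypothetical stand-in for a sensed shape: <0 inside, 0 on the surface, >0 outside."""
    return np.linalg.norm(x / radii) - 1.0

def local_frame(x, level_fn=ellipsoid_level, ref_axis=(0.0, 0.0, 1.0), eps=1e-6):
    """Return a 3x3 rotation whose columns are [tangent, binormal, normal] at point x."""
    x = np.asarray(x, dtype=float)
    ref_axis = np.asarray(ref_axis, dtype=float)
    # Central finite differences approximate the gradient of the level function.
    grad = np.array([
        (level_fn(x + eps * d) - level_fn(x - eps * d)) / (2.0 * eps)
        for d in np.eye(3)
    ])
    normal = grad / np.linalg.norm(grad)  # "away from the object"
    # Project a reference direction onto the tangent plane: "along the surface".
    # Assumption: ref_axis is not parallel to the normal at x.
    tangent = ref_axis - ref_axis.dot(normal) * normal
    tangent = tangent / np.linalg.norm(tangent)
    binormal = np.cross(normal, tangent)  # completes a right-handed frame
    return np.column_stack([tangent, binormal, normal])

# A frame at a point slightly off the surface, on the x axis.
R = local_frame([1.5, 0.0, 0.0])
```

Because the frame is defined near the object as well as on it, a direction expressed in local coordinates keeps its meaning both during the approach and during contact.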

Reusing a single skill on many objects

Because movements are defined relative to the object, not to a fixed robot frame, the same task description can be reused on fruits and food items that are longer, shorter or slightly deformed. The robot automatically adjusts its motion to the new shape, while the overall motion pattern, for example a peeling stroke, remains the same. This is a step toward teaching a robot a skill once and then reusing it on many different but related objects.
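The reuse described above can be sketched as follows. The sketch assumes a skill is stored as a sequence of small displacements expressed in local coordinates (tangent, binormal, normal), and that both objects are hypothetical ellipsoids with known radii. The helper names (`level`, `gradient`, `local_frame`, `replay`) and the Newton-style correction that keeps the tool on the surface are illustrative choices, not the method from the cited papers.

```python
import numpy as np

def level(x, radii):
    """Implicit ellipsoid: <0 inside, 0 on the surface, >0 outside."""
    return np.linalg.norm(x / radii) - 1.0

def gradient(x, radii):
    """Analytic gradient of `level`; points away from the object."""
    return (x / radii**2) / np.linalg.norm(x / radii)

def local_frame(x, radii, ref_axis=np.array([0.0, 0.0, 1.0])):
    """Columns are [tangent, binormal, normal]; assumes ref_axis is not parallel to the normal."""
    n = gradient(x, radii)
    n = n / np.linalg.norm(n)
    t = ref_axis - ref_axis.dot(n) * n
    t = t / np.linalg.norm(t)
    return np.column_stack([t, np.cross(n, t), n])

def replay(skill, start, radii, newton_iters=5):
    """Replay local displacements on an object, re-evaluating the frame at every step."""
    radii = np.asarray(radii, dtype=float)
    x = np.asarray(start, dtype=float)
    path = [x.copy()]
    for u in skill:
        # Map the local displacement into world coordinates with the current frame.
        x = x + local_frame(x, radii) @ u
        # Assumed contact-keeping step: Newton iterations back onto the zero level set.
        for _ in range(newton_iters):
            g = gradient(x, radii)
            x = x - level(x, radii) * g / g.dot(g)
        path.append(x.copy())
    return np.array(path)

# One skill: 20 small steps "along the surface" in the tangent direction.
skill = np.tile([0.05, 0.0, 0.0], (20, 1))

# The same skill replayed on two differently shaped objects.
path_a = replay(skill, start=[1.0, 0.0, 0.0], radii=[1.0, 1.0, 2.0])
path_b = replay(skill, start=[0.8, 0.0, 0.0], radii=[0.8, 0.8, 3.0])
```

The skill array is identical in both calls. Only the frames, and therefore the resulting world-space paths, differ between the two shapes.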

Reusing a single representation across many skills

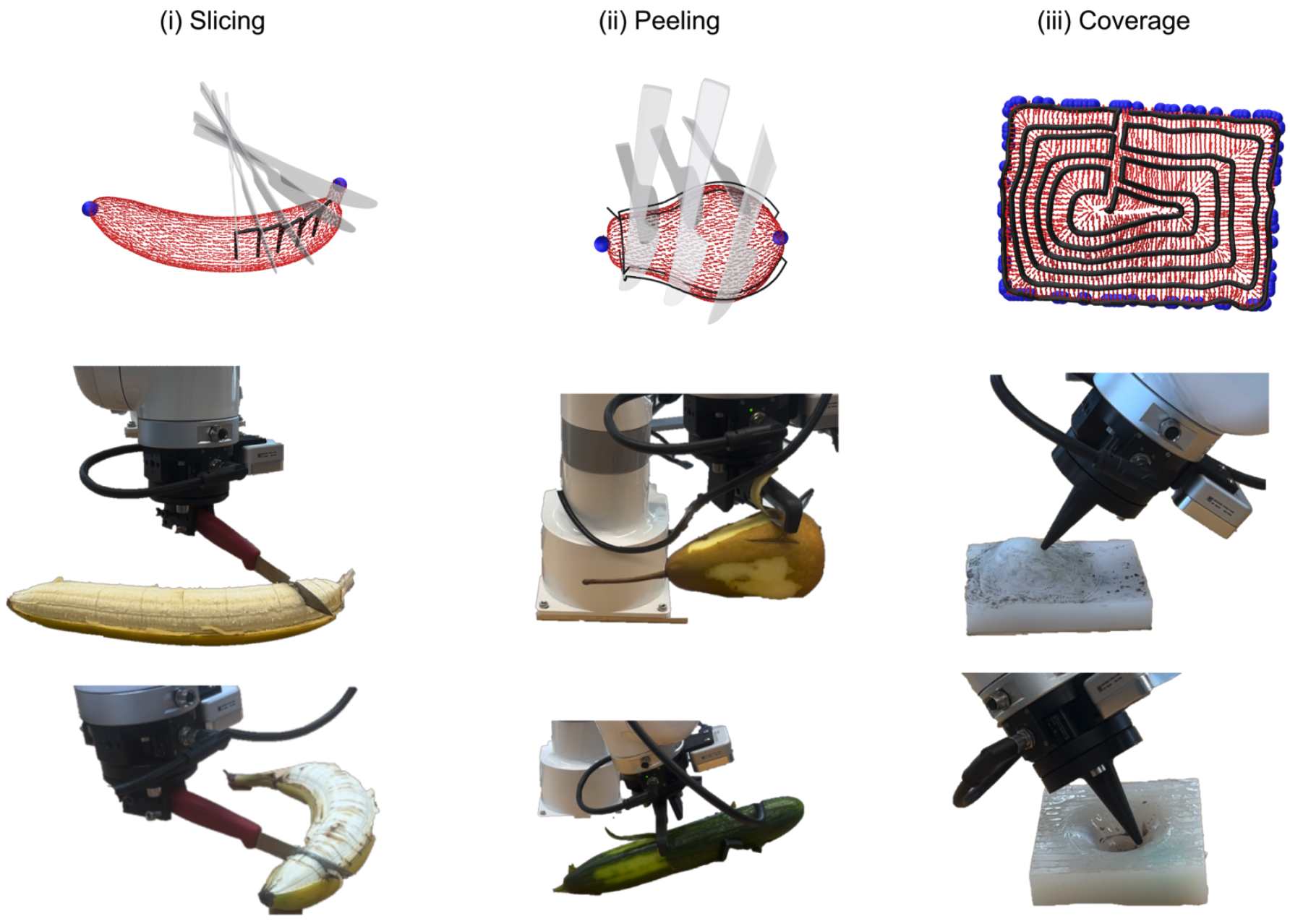

The same geometry-aware representation can also support very different skills without redesigning everything from scratch. Once the robot has local reference frames around an object, it can use them to peel along the surface, to perform gentle coverage motions for inspection or cleaning, or to define precise cutting directions for slicing. What changes is the objective and the motion pattern, not the underlying way the robot “understands” the object.

Video presenting various experiments on curved objects from everyday life:

Dissemination Activities

This line of work has been showcased in various venues:

- an extended abstract presented at ICRA@40 (https://arxiv.org/abs/2411.02169)

- a workshop paper presented at IROS 2025 (https://openreview.net/forum?id=gkGI7ZJvu2)

- a live demonstration during the Swiss Robotics Day 2025 (https://swissroboticsday.ch/srd25/)

- a journal paper currently in preparation (preprint: https://arxiv.org/abs/2511.18563)